WebGPU Voxel Fractals

Addison Prairie / July 23

One of the projects I have been most eager to revisit is "WebGL Voxel Fractals". It was my first time working with acceleration structures and real time rendering, and I have many ideas for how I could improve the project. Additionally, with WebGPU supporting rolling out with Chrome 113, a lot of work that originally would have occupied the CPU thread can be moved onto the GPU via compute shaders.

The demo page for the project can be found here. The "help" button on the UI will open up a window that explains the controls, how to interact with the scene, and gives some notes about the procedural generation algorithm. Additionally, the source code is available here.

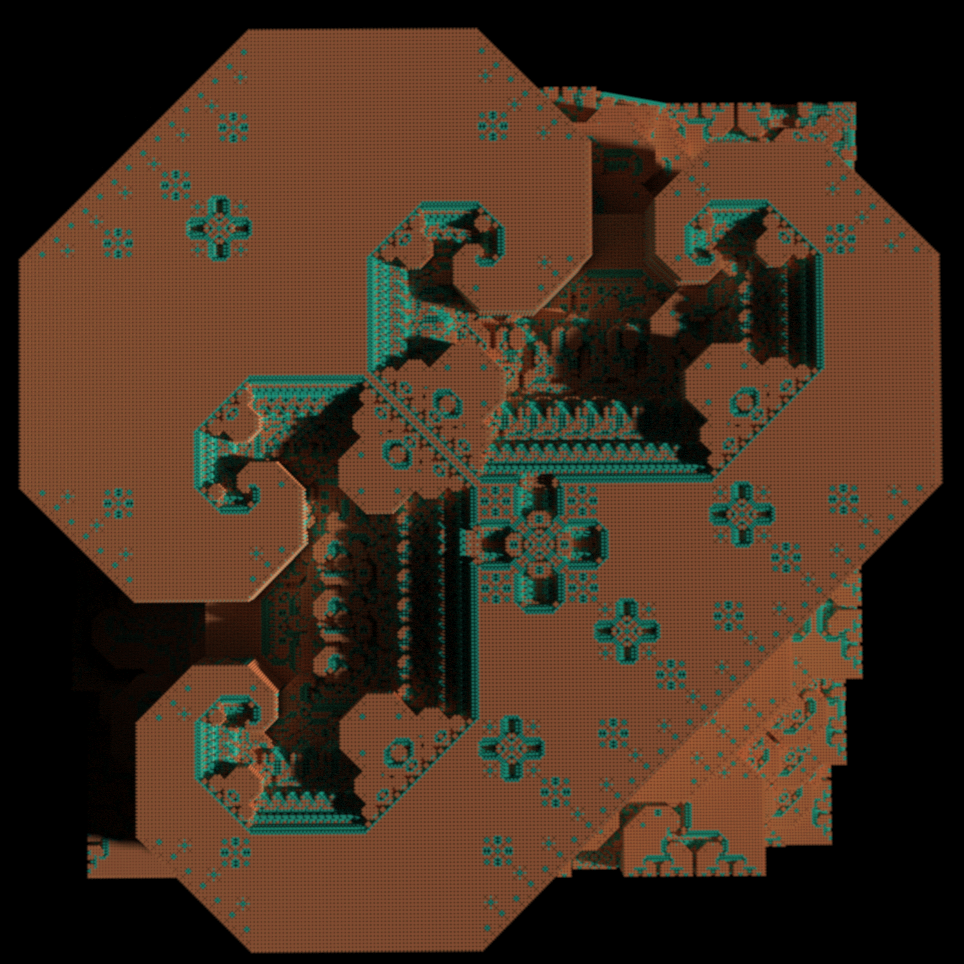

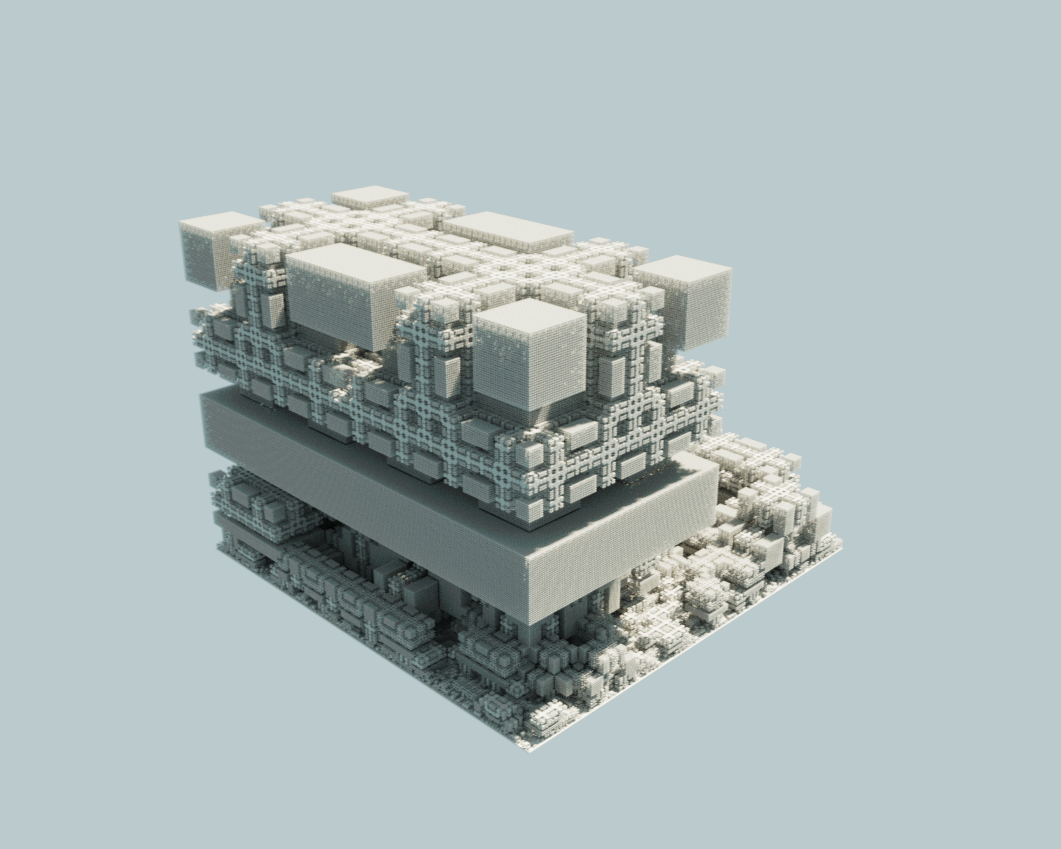

Pathtraced 512x512x512 voxel fractal. Generated in ~15s and rendered in ~5s

1. Voxels & Voxel Acceleration Structures

The most performance critical part of the rendering loop is the ray-scene intersection function. While it seems that the conventional wisdom is that Sparse Voxel Octrees (SVOs) are the ideal acceleration structure for large voxel volumes, their design carries some disadvantages that are only overcome by extremely large scenes. Some of these disadvantages include:

- Incoherent Memory: inherent in any tree/pointer-based acceleration structure is the tendency for a thread to jump around somewhat randomly in memory while traversing the acceleration structure. This takes a significant toll on performance.

- Long Construction Time: it is difficult to construct a sparse voxel octree on the GPU efficiently, since writing to a sparse structure requires inter-thread communcation and memory coherency.

- Slow to edit: sparse voxel octrees are slow to edit, since any manipulation to the structure may require the deletion or insertion of nodes into the structure.

Given these limitations, and the capabilities of modern GPUs, I decided to use a dense voxel octree (DVO) to accelerate ray tracing. Basically, rather than storing different levels of details as different heights in an SVO, a DVO stores a dense (think linear array) representation of the scene at every level of detail. Consider the following example of a 2D scene:

+---+---+---+---+---+---+---+---+ | 1 | 1 | | | | | | | +---+---+---+---+---+---+---+---+ | | | | | | | | | +---+---+---+---+---+---+---+---+ | | | 1 | | | | | | +---+---+---+---+---+---+---+---+ | | | | | | | | | +---+---+---+---+---+---+---+---+ | 1 | 1 | | | | | | | +---+---+---+---+---+---+---+---+ | 1 | 1 | | | | | | | +---+---+---+---+---+---+---+---+ | | | | | | | | | +---+---+---+---+---+---+---+---+ | | | | | | | 1 | | +---+---+---+---+---+---+---+---+

The sparse voxel octree (or quadtree in this example) would for this scene would look something like this:

+---+---+

| 1 | 0 |

+---+---+ (LOD 3)

| 1 | 1 |

+---+---+

|

+---------------+-----------+

| | |

+---+---+ +---+---+ +---+---+

| 1 | 0 | | 1 | 0 | | 0 | 0 |

+ --+---+ +---+---+ +---+---+ (LOD 2)

| 0 | 1 | | 0 | 0 | | 0 | 1 |

+---+---+ +---+---+ +---+---+

| | |

+----+----+ | |

| | | |

+---+---+ +---+---+ +---+---+ +---+---+

| 1 | 1 | | 1 | 0 | | 1 | 1 | | 0 | 0 |

+---+---+ +---+---+ +---+---+ +---+---+ (LOD 1)

| 0 | 0 | | 0 | 0 | | 1 | 1 | | 1 | 0 |

+---+---+ +---+---+ +---+---+ +---+---+

Whereas the dense representation in memory would look like this:

+---+---+---+---+---+---+---+---+ +---+---+---+---+ +---+---+

| 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | | 1 | 0 | 0 | 0 | | 1 | 0 |

+---+---+---+---+---+---+---+---+ +---+---+---+---+ +---+---+

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | | 0 | 1 | 0 | 0 | | 1 | 1 |

+---+---+---+---+---+---+---+---+ +---+---+---+---+ +---+---+

| 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | | 1 | 0 | 0 | 0 | (LOD 3)

+---+---+---+---+---+---+---+---+ +---+---+---+---+

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | | 0 | 0 | 0 | 1 |

+---+---+---+---+---+---+---+---+ +---+---+---+---+

| 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | (LOD 2)

+---+---+---+---+---+---+---+---+

| 1 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |

+---+---+---+---+---+---+---+---+

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

+---+---+---+---+---+---+---+---+

| 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

+---+---+---+---+---+---+---+---+

(LOD 1)

Clearly, the dense representation has the potential to take up significantly more memory; however, it in turn does not suffer from any of the issues listed above. In WebGPU, the dense voxel octree can be stored in a 3D texture, and the higher LODs can be stored as MIP maps of that texture. As a set of rays travelling in roughly the same direction from the same position traverses the octree (like primary visibility rays), they will read from texture coordinates nearby eachother, which is much friendlier for the GPU texture units/caches. Additionally, it requires significantly (up to 75% less) less memory than a sparse structure because the dense representation understands the memory location of the next node to traverse implicitly (from its position) and thus does not need read a pointer in memory.

The acceleration structure can pretty easily be built in parallel in a compute shader. One difficulty that arises in WebGPU is that there are some requirements for memory alignment when copying a storage buffer to a texture. First, WebGPU does not let you write to a byte in memory from a compute shader. So, if you want to store the higher LOD representations of your scene as bytes, you need to find some way to get around this limitation; for me, it was to build the acceleration structure first as u32s in an intermediate buffer, and then have another compute pass "pack" this structure into a format ready to be copied to a texture of bytes.

Pathtraced 512x512x512 voxel fractal. Generated in ~15s and rendered in ~5s

2. Traversing the Octree

Since the most performance critical portion of the rendering loop is the ray-scene intersection function, it is important that our octree traversal algorithm is optimized. For this project, I focused on optimizing a single traversal function which was loosely based on a voxel DDA. In the future, I'd like to revisit this project to test many different types of traversal functions to find which works best with a dense voxel octree.

A basic voxel DDA operates by storing a variable, in my case called "dist", which for each x, y, z, stores the distance along the ray we must step to reach the next voxel edge parallel to the plane normal to that axis. A basic example in two dimensions would look like this:

+----------+---> y | |/ | [0, 0] B KEY | /| +---------------------+ | / | | * = ray origin | +-------A--+ | / = ray direction | | / | | A/B = intersections | | / | +---------------------+ | * | | [1, 0] | +----------+ | | v x

With two dimensions, "dist" is a vector of two dimensions. In this case, the line that A is perpendicular to the x axis, so dist.x is the distance along the ray to reach A. Similarly, since B sits on a line perpendicular to the y axis, dist.y is the distance along the ray to reach B. To know which voxel to step into next, we just need to check which component of "dist" is least; in this case, the next step would be decreasing x by 1, moving from [1, 0] to [0, 0].

I won't go into much more detail around the original DDA algorithm, since it is very well described in other places. Here is a basic outline of my traversal function:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

fn trace(o : vec3f, d : vec3f) -> bool {

//the starting LOD, log_2(SCENE_SIZE)

var lod = STARTING_LOD;

//usual DDA stuff

var ipos = vec3i(floor(o)) >> vec3u(lod);

var idir = abs(1. / d) * f32(SCENE_SIZE / 2);

var dist = (sign(d) * (vec3f(ipos) - o * (1. / f32(vsize))) + sign(d) * .5 + .5) * idir;

var step = vec3i(sign(d));

//used for updating dist at lod change

const DESCENT_MASK = vec3i(vec3f(d < vec3f(0.)));

var mask : vec3b;

for (var i = 0; i < MAX_STEPS; i++) {

//fetch current node from memory

var node = loadNode(ipos, lod);

var octant = ipos & vec3i(1);

var octantMask = 1u << dot(octant, vec3i(1, 2, 4));

//check if we hit something

if ((node & octantMask) > 0) {

//if we cannot go to a lower lod, we hit a voxel

if (lod == 0u) {

return true;

}

var tmin = max(dot(dist - idir, vec3f(mask)), 0.);

var wpos = o + d * tmin + eps * vec3f(mask);

//decrement LOD and update ipos

ipos = vec3i(floor(wpos)) >> vec3u(--lod);

//based on the octant we end up in, we must update "dist"

var changeMask = vec3f((ipos & vec3i(1)) == DESCENT_MASK);

idir *= .5;

dist -= changeMask * idir;

continue;

}

//usual DDA stuff

mask = dist <= min(dist.zxy, dist.yzx);

ipos += vec3i(vec3f(mask)) * step;

//all of the directions such that a step in that direction would

//we should ascend the octree

var exitMask = (vec3i(1) - DESCENT_MASK) == octant;

var bAscend = any(exitMask & mask);

//if the node is empty or we exited, go up a level in the octree

if (node == 0u || bAscend) {

dist += vec3f(!exitMask) * idir;

idir *= 2.;

lod += 1;

ipos = ipos >> vec3u(1u);

mask &= vec3b(ascend);

if (isOutOfBounds(ipos, lod)) {

break;

}

}

dist += vec3f(mask) * idir;

}

return false;

}

The first thing to note is that I chose to store 2x2x2 chunks of voxels in memory as bytes, meaning to tell whether a voxel is filled, you would first need to fetch the byte which contains it (line 17), then calculate the voxel's position in the 2x2x2 block and check the bit at that index within the byte (lines 19-20, 23). For example, to check whether the voxel at v = [63, 2, 1] was filled, we would look at the dot(v & 1, [1, 2, 4]) = 1 + 4 = 5th bit of the byte which corresponds to that voxel.

A decent chunk (lines 6-9, 44-45, 66) of the function is standard DDA stuff; the rest of the code allows the DDA to seamlessly traverse the scene at different levels of detail. As far as I can tell, this strategy for traversing a voxel scene at multiple levels of detail is somewhat new, so I'll give a short explanation here of the ideas behind why it works:

- If we go down a level of detail (lines 23-40), we must calculate our new world position (line 30) to determine the new voxel we are in. We can use "dist", but must subtract the last step we took (i.e., "idir * vec3f(mask)", since "mask" is stored across loop iterations). Next, we calculate the current voxel in line 33. Finally, see that it is possible that "dist" must be updated based on where we descend to; however, we know this change from "DESCENT_MASK" and the octant of the voxel position.

- If we go up a level of detail (lines 54-63), it is easier to update our voxel position (line 57). Again, we will likely have to update "dist". First, in line 54, we update "dist" so that every component of "dist" which is not giving the distance to the edge of the current 2x2x2 block is updated. Additionally, "dist" must be updated again based on the step we just took, since if a step at the current LOD caused us to exit our current 2x2x2 block, then it must step into the next 2x2x2 block (line 66). Note that this should not be done if we are ascending a level only because our current 2x2x2 block is empty ("node == 0u"); so, if that is the case, "mask" is set to [false, false, false] (line 59).

Pathtraced 512x512x512 voxel fractal. Generated in ~15s and rendered in ~5s

3. Iterations

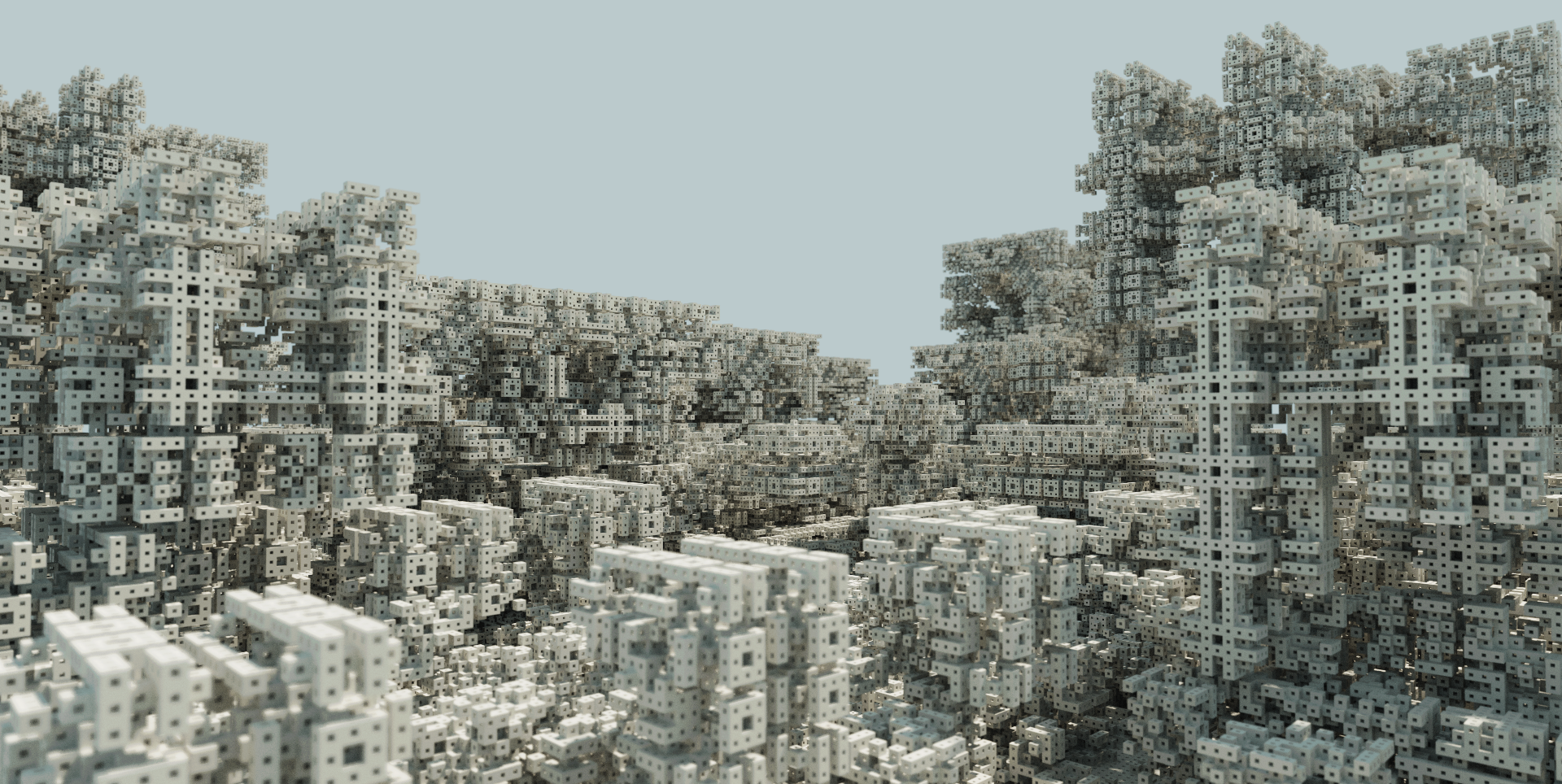

When I first started this project, I was planning on developing it into a real time voxel path tracer using SVGF as a denoiser. The initial tests went somewhat well, but I never felt like I quite hit the performance targets I wanted. Here is an early example of an expirement with real time path tracing:

Early iteration of real time voxel path tracer, running at ~80 fps on my laptop.

Note that there is no denoising; however, even without it, the image still converges extremely quickly once the camera is still. I have a few other clips and screenshots showing some different early iterations of this real time voxel path tracer and the different techniques used, including .25spp path tracing and A-Trous denoising. However, none of them got me close enough to the performance I wanted, so I ultimately dropped trying to get it to run in real time. Eventually, I would like to try real time path tracing again.